(or, what I did over summer vacation)

For the last few months, I have been working with the Bitbucket team at Atlassian. I switched to this team at the beginning of the summer to help build a new inline commenting feature for pull requests and commit pages, so that the tool would be more useful for code reviews in a team.

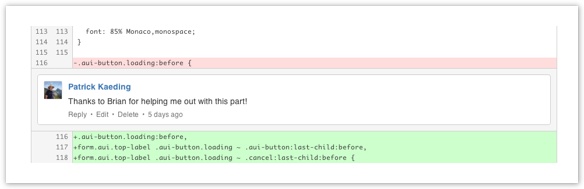

It was a great project, and I wrote up a story of how we built inline comments for pull requests and commits over at the Bitbucket blog. Check it out!

FIBS: Functional interface for Interactive Brokers for Scala

Announcing a small side project I have been hacking on: a Scala wrapper library for the Interactive Brokers TWS API. The TWS API uses a message-passing design: you send a message asking for, say, a quote, and then you receive a series of messages back that together make up that quote. It is up to you to keep track of which response messages refer to which quote request, and the whole thing involves a lot of mutable state.
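To make the bookkeeping problem concrete, here is a minimal sketch, assuming a simplified message model in which every response carries the id of the request it answers. The message types (`Msg`, `BidMsg`, `AskMsg`, `EndMsg`) and the `Book`/`step` helpers are hypothetical stand-ins, not the TWS API's own callbacks. Instead of updating a shared table as messages arrive, a pure `foldLeft` threads the partially assembled quotes through as state.

```scala
// Hypothetical response messages for a message-passing quote API.
// Each one is tagged with the id of the request it answers.
sealed trait Msg { def reqId: Int }
final case class BidMsg(reqId: Int, price: Double) extends Msg
final case class AskMsg(reqId: Int, price: Double) extends Msg
final case class EndMsg(reqId: Int) extends Msg

final case class Quote(bid: Option[Double], ask: Option[Double])

// Fold state: quotes still being assembled plus finished ones, keyed by request id.
final case class Book(pending: Map[Int, Quote], done: Map[Int, Quote])

val emptyBook = Book(Map.empty, Map.empty)

// Pure step: returns a new Book instead of mutating a shared table.
def step(book: Book, msg: Msg): Book = {
  val current = book.pending.getOrElse(msg.reqId, Quote(None, None))
  msg match {
    case BidMsg(id, p) => book.copy(pending = book.pending.updated(id, current.copy(bid = Some(p))))
    case AskMsg(id, p) => book.copy(pending = book.pending.updated(id, current.copy(ask = Some(p))))
    case EndMsg(id)    => Book(book.pending - id, book.done.updated(id, current))
  }
}

// Replies to two interleaved requests, in the order they might arrive (made-up values).
val incoming: List[Msg] = List(
  BidMsg(1, 10.0), BidMsg(2, 20.0), AskMsg(1, 10.5),
  EndMsg(1), AskMsg(2, 20.25), EndMsg(2)
)

val quotes: Map[Int, Quote] = incoming.foldLeft(emptyBook)(step).done
```

Because `step` returns a new `Book` rather than updating one in place, the same function works whether the messages come from a list in a test or from a live feed.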

I, however, wanted to write an application that stays as functionally pure as possible, with little or no shared mutable state, and that can process many quotes at once. I wanted to do this using my favorite functional language du jour, Scala/scalaz.

So, I created FIBS, which is a terrible backronym that I am claiming stands for Functional interface for Interactive Brokers for Scala. It is in its infancy, and currently only supports the following operations:

There is much, much more in the API to flesh out. Another thing I don’t like about the TWS API is that it is up to you to know which parameters are appropriate for the request you are making. (For example, only certain order parameters make sense for a given order type.) So, I want to enforce this logic with the type system. You, as the consumer of my API, should never have to pass a null for a parameter that makes no sense in the context of what you are doing; the type system should rule that out.
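As a sketch of what enforcing this with the type system could look like, assume a small sealed hierarchy in which each order type carries only its own parameters. All the names here (`MarketOrder`, `LimitOrder`, `StopLimitOrder`, `FlatParams`, `toFlat`) are hypothetical, not FIBS's actual API. `FlatParams` stands in for the kind of one-size-fits-all order record a flat API expects; the order-type codes `MKT`, `LMT` and `STP LMT` are the ones Interactive Brokers uses, but the record's shape is an assumption.

```scala
// Hypothetical typed order model: each order type has exactly the fields it needs.
sealed trait Action
case object Buy extends Action
case object Sell extends Action

sealed trait Order {
  def action: Action
  def quantity: Int
}
final case class MarketOrder(action: Action, quantity: Int) extends Order
final case class LimitOrder(action: Action, quantity: Int, limitPrice: BigDecimal) extends Order
final case class StopLimitOrder(action: Action, quantity: Int,
                                stopPrice: BigDecimal, limitPrice: BigDecimal) extends Order

// Hypothetical flat record: fields that do not apply become None here,
// in one place, instead of null at every call site.
final case class FlatParams(orderType: String, action: String, quantity: Int,
                            limitPrice: Option[BigDecimal], stopPrice: Option[BigDecimal])

def toFlat(order: Order): FlatParams = {
  val act = order.action match { case Buy => "BUY"; case Sell => "SELL" }
  order match {
    case MarketOrder(_, q)               => FlatParams("MKT", act, q, None, None)
    case LimitOrder(_, q, lmt)           => FlatParams("LMT", act, q, Some(lmt), None)
    case StopLimitOrder(_, q, stop, lmt) => FlatParams("STP LMT", act, q, Some(lmt), Some(stop))
  }
}
```

Writing `LimitOrder(Buy, 100)` without a limit price simply does not compile, and because `Order` is sealed, the compiler warns if `toFlat` forgets to handle an order type.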

Instead of blocking while various pieces of information come back, you will get a Promise, a Stream, or some other monad that lets you move forward with your code while IB does its thing.
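One common way to get there with only the Scala standard library (rather than scalaz) is to complete a `Promise` from inside a callback and hand the caller its `Future`. This is a minimal sketch under stated assumptions: `CallbackClient`, `requestPrice`, `pump` and `priceOf` are hypothetical, the client merely simulates a message loop with made-up prices, and none of it is the TWS API itself.

```scala
import scala.collection.mutable
import scala.concurrent.{ExecutionContext, Future, Promise}

// Hypothetical callback-style client standing in for a message-passing API.
// Replies are queued and delivered only when the message loop runs,
// mimicking responses that arrive some time after the request.
final class CallbackClient {
  private val prices = Map("AAA" -> 10.0, "BBB" -> 20.0) // made-up data
  private val outbox = mutable.Queue.empty[() => Unit]

  def requestPrice(symbol: String)(onReply: Double => Unit, onError: String => Unit): Unit =
    outbox.enqueue { () =>
      prices.get(symbol) match {
        case Some(p) => onReply(p)
        case None    => onError(s"unknown symbol $symbol")
      }
    }

  // Deliver every pending reply (a stand-in for the API's message loop).
  def pump(): Unit = while (outbox.nonEmpty) outbox.dequeue()()
}

// Hypothetical wrapper: bridge the callback pair to a Future via a Promise.
def priceOf(client: CallbackClient, symbol: String): Future[Double] = {
  val promise = Promise[Double]()
  client.requestPrice(symbol)(
    price => promise.success(price),
    msg => promise.failure(new NoSuchElementException(msg))
  )
  promise.future
}

// Run Future callbacks on the completing thread so the demo stays single-threaded.
implicit val ec: ExecutionContext = ExecutionContext.parasitic

val client = new CallbackClient
val aaa = priceOf(client, "AAA") // both requests are outstanding...
val bbb = priceOf(client, "BBB")
val total: Future[Double] = aaa.zip(bbb).map { case (a, b) => a + b }

client.pump() // ...until the message loop delivers the replies
```

Both requests are outstanding before either reply arrives, and `zip` combines them without any shared mutable state on the caller's side. The `parasitic` execution context (Scala 2.13 and later) is only there to keep the demo deterministic; a real client would more likely run its callbacks on a thread pool.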

So, I am making progress on the API slowly, adding pieces as I need them for my application. I wanted to make it public to see whether anyone else is interested. I could also use some domain expertise on exactly which parameters are appropriate, and when: my experience as a quant is strictly amateur.

Check it out on Bitbucket!